Airflow is a platform to schedule and monitor workflows. It is written in Python and is installed and used as a Python library. I have recently worked with it, so let's look at some basic usage in this article.

Install and Start

1. Prepare the Python environment.

I use conda to create a new Python 3.6 environment.

```bash
conda create --name py36 python=3.6
conda activate py36
```

Then check that it is installed properly.

```
% python --version
Python 3.6.13 :: Anaconda, Inc.
```

2. Install Airflow.

Install Airflow with pip. The command below is copied from the Airflow documentation. Make sure the versions in it (both the Airflow version and the Python version in the constraint URL) match your local setup.

```bash
pip install 'apache-airflow==2.0.1' \
  --constraint "https://raw.githubusercontent.com/apache/airflow/constraints-2.0.1/constraints-3.6.txt"
```
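Because the constraint file has to match both the Airflow version and the local Python version, a small helper can build the URL from the running interpreter. This is a sketch that only follows the URL pattern of the command above; the function name `constraint_url` is hypothetical and not part of Airflow.

```python
import sys


def constraint_url(airflow_version: str, python_version: str) -> str:
    """Build the pip constraint-file URL using the same pattern as the install command."""
    return (
        "https://raw.githubusercontent.com/apache/airflow/"
        f"constraints-{airflow_version}/constraints-{python_version}.txt"
    )


if __name__ == "__main__":
    # Use the major.minor version of the interpreter running this script, e.g. "3.6".
    py = f"{sys.version_info.major}.{sys.version_info.minor}"
    print(constraint_url("2.0.1", py))
```

Running it inside the `py36` environment should print the same URL as in the pip command.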

3. Start Airflow.

Starting Airflow takes several steps.

First, set the following environment variable so that Airflow uses the current folder as its home directory (where its config file, database, and logs are created).

```bash
export AIRFLOW_HOME=$(pwd)
```
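If `AIRFLOW_HOME` is not set, Airflow falls back to `~/airflow`, and its default config places DAG files in a `dags` folder under the home directory. The sketch below models that lookup in plain Python; the function names are hypothetical helpers for illustration, not Airflow APIs.

```python
import os


def resolve_airflow_home(env=None) -> str:
    """Return the Airflow home directory, falling back to ~/airflow when unset."""
    env = os.environ if env is None else env
    raw = env.get("AIRFLOW_HOME", "~/airflow")
    # Expand "~" so the result points at the real user directory.
    return os.path.expanduser(raw)


def default_dags_folder(airflow_home: str) -> str:
    """The default config puts DAG files in <AIRFLOW_HOME>/dags."""
    return os.path.join(airflow_home, "dags")


if __name__ == "__main__":
    home = resolve_airflow_home()
    print("AIRFLOW_HOME:", home)
    print("dags folder:", default_dags_folder(home))
```

This is why the variable has to be exported in every terminal that runs an `airflow` command: otherwise that command silently uses `~/airflow` instead of the project folder.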

Then run the command below to initialize the database.

```bash
airflow db init
```

I ran into a problem on my Mac and found the solution in this blog.

After the database is created, create a new user. The command prompts for the user's password.

```bash
airflow users create --username admin --firstname firstname --lastname lastname --role Admin --email admin@xxx.com
```

Then start the scheduler, which triggers the DAG runs.

```bash
airflow scheduler
```

Keep it running, open a new terminal, and start the Airflow webserver there.

```bash
conda activate py36
export AIRFLOW_HOME=$(pwd)
airflow webserver -p 8080
```

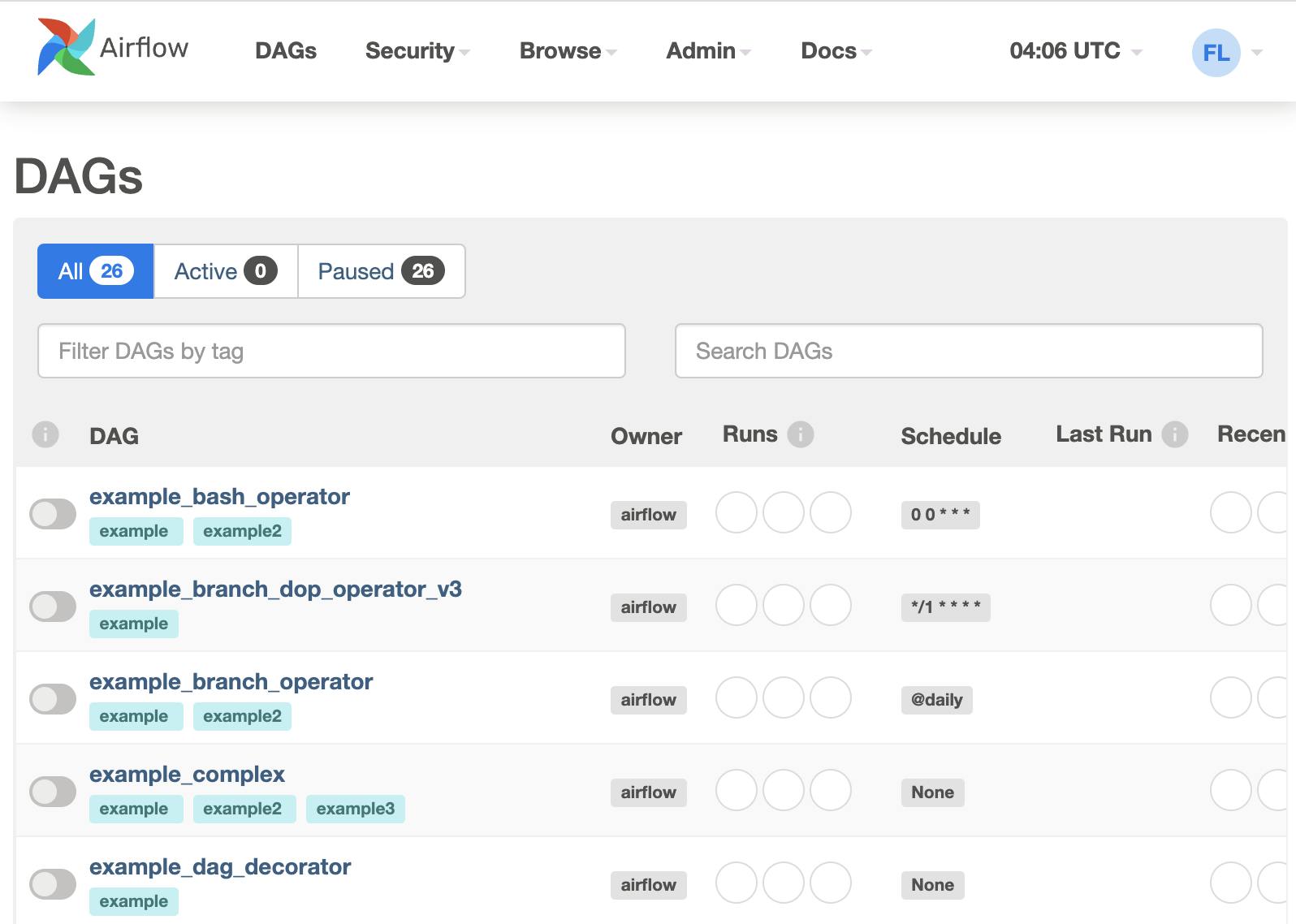

Now that Airflow is running, go to http://0.0.0.0:8080/ and log in with the user account we just created.

Hello World

Now that we have a local Airflow environment, let's write our hello world DAG.

Inside the project folder (the AIRFLOW_HOME directory), create a new folder named dags. The DAG Python files go in this folder, and Airflow loads them automatically.
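Not every `.py` file in the dags folder is parsed. As far as I know, Airflow's default "safe mode" only parses files whose contents mention both "airflow" and "dag" (case-insensitive), so plain helper modules are skipped. The sketch below is a simplified model of that heuristic, not Airflow's own code; the function names are hypothetical.

```python
from pathlib import Path
from typing import List


def looks_like_dag_file(content: bytes) -> bool:
    """Simplified safe-mode check: both 'airflow' and 'dag' must appear."""
    lowered = content.lower()
    return b"airflow" in lowered and b"dag" in lowered


def candidate_dag_files(dags_folder: str) -> List[str]:
    """List .py files under dags_folder that pass the heuristic above."""
    return [
        path.name
        for path in sorted(Path(dags_folder).rglob("*.py"))
        if looks_like_dag_file(path.read_bytes())
    ]
```

A file starting with `from airflow import DAG` passes the check, while a module that only defines utility functions does not.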

Now create a new Python file hello_world.py inside the dags folder with the following content.

```python
from airflow import DAG
from airflow.operators.bash import BashOperator
from datetime import datetime, timedelta

default_args = {
    "owner": "123",
    "retries": 5,
    "retry_delay": timedelta(minutes=2),
}

with DAG(
    dag_id="hello_world",
    default_args=default_args,
    description="my hello world dag",
    start_date=datetime(2022, 10, 7),
    schedule_interval="@daily",
) as dag:
    task = BashOperator(
        task_id="hello_world_task",
        bash_command="echo hello world",
    )
```

As you can see, we created a DAG with a few parameters. default_args holds arguments that are applied to every task in the DAG, here the owner and the retry policy. The DAG is scheduled starting from start_date and runs at the frequency given by schedule_interval.
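Conceptually, each task receives the entries of default_args as constructor arguments, and any argument passed directly to the operator takes precedence. The sketch below models that merge with plain dictionaries; `effective_task_args` is a hypothetical helper, not an Airflow function.

```python
from datetime import timedelta

default_args = {
    "owner": "123",
    "retries": 5,
    "retry_delay": timedelta(minutes=2),
}


def effective_task_args(defaults: dict, task_kwargs: dict) -> dict:
    """Merge DAG-level defaults with task-level arguments; task values win."""
    merged = dict(defaults)
    merged.update(task_kwargs)
    return merged


if __name__ == "__main__":
    # A hypothetical task that overrides only the retry count.
    print(effective_task_args(default_args, {"task_id": "hello_world_task", "retries": 1}))
```

This makes default_args a convenient place for settings shared by most tasks, while individual tasks can still opt out.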

Inside this DAG we create only one task, but we could also create multiple tasks. Together these tasks form the DAG and run in the specified order.

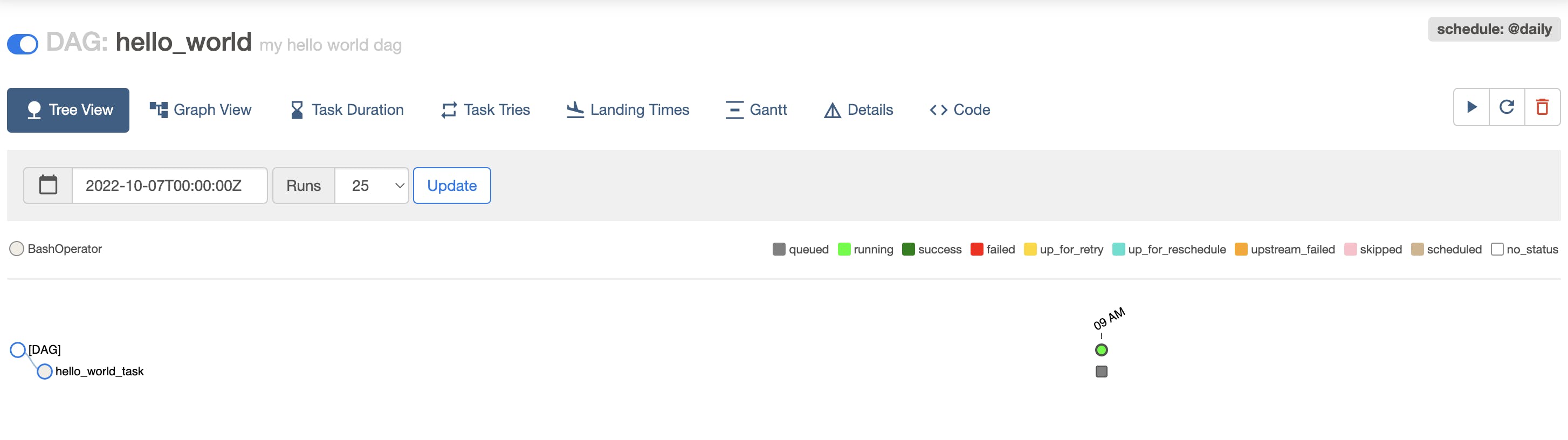

Go to the client, refresh the page, we can see this hello_world dag in the list. Click into it and start run. Then we should see a process like below.

If the DAG run succeeds, the task changes to green, the color Airflow uses for the success state. We can click on the task to see its logs.

```
*** Reading local file: /Users/yao/Documents/GitHub/demos/todo/airflow-toturial/logs/hello_world/hello_world_task/2022-10-07T00:00:00+00:00/1.log
[2022-10-08 20:11:07,883] {taskinstance.py:851} INFO - Dependencies all met for <TaskInstance: hello_world.hello_world_task 2022-10-07T00:00:00+00:00 [queued]>
[2022-10-08 20:11:07,894] {taskinstance.py:851} INFO - Dependencies all met for <TaskInstance: hello_world.hello_world_task 2022-10-07T00:00:00+00:00 [queued]>
[2022-10-08 20:11:07,894] {taskinstance.py:1042} INFO -
--------------------------------------------------------------------------------
[2022-10-08 20:11:07,894] {taskinstance.py:1043} INFO - Starting attempt 1 of 6
[2022-10-08 20:11:07,894] {taskinstance.py:1044} INFO -
--------------------------------------------------------------------------------
[2022-10-08 20:11:07,901] {taskinstance.py:1063} INFO - Executing <Task(BashOperator): hello_world_task> on 2022-10-07T00:00:00+00:00
[2022-10-08 20:11:07,908] {standard_task_runner.py:52} INFO - Started process 7627 to run task
[2022-10-08 20:11:07,916] {standard_task_runner.py:76} INFO - Running: ['airflow', 'tasks', 'run', 'hello_world', 'hello_world_task', '2022-10-07T00:00:00+00:00', '--job-id', '79', '--pool', 'default_pool', '--raw', '--subdir', 'DAGS_FOLDER/my_dag.py', '--cfg-path', '/var/folders/5l/hkzdrtyd3x3d0b1cwmkzn9z40000gn/T/tmp8o1wtpgp', '--error-file', '/var/folders/5l/hkzdrtyd3x3d0b1cwmkzn9z40000gn/T/tmphijxje_0']
[2022-10-08 20:11:07,919] {standard_task_runner.py:77} INFO - Job 79: Subtask hello_world_task
[2022-10-08 20:11:12,948] {logging_mixin.py:104} INFO - Running <TaskInstance: hello_world.hello_world_task 2022-10-07T00:00:00+00:00 [running]> on host Michaels-MacBook-Air.local
[2022-10-08 20:11:17,989] {taskinstance.py:1257} INFO - Exporting the following env vars:
AIRFLOW_CTX_DAG_OWNER=123
AIRFLOW_CTX_DAG_ID=hello_world
AIRFLOW_CTX_TASK_ID=hello_world_task
AIRFLOW_CTX_EXECUTION_DATE=2022-10-07T00:00:00+00:00
AIRFLOW_CTX_DAG_RUN_ID=scheduled__2022-10-07T00:00:00+00:00
[2022-10-08 20:11:17,990] {bash.py:135} INFO - Tmp dir root location:
/var/folders/5l/hkzdrtyd3x3d0b1cwmkzn9z40000gn/T
[2022-10-08 20:11:17,990] {bash.py:158} INFO - Running command: echo hello world
[2022-10-08 20:11:17,997] {bash.py:169} INFO - Output:
[2022-10-08 20:11:18,008] {bash.py:173} INFO - hello world
[2022-10-08 20:11:18,008] {bash.py:177} INFO - Command exited with return code 0
[2022-10-08 20:11:18,015] {taskinstance.py:1166} INFO - Marking task as SUCCESS. dag_id=hello_world, task_id=hello_world_task, execution_date=20221007T000000, start_date=20221008T111107, end_date=20221008T111118
[2022-10-08 20:11:18,020] {taskinstance.py:1220} INFO - 0 downstream tasks scheduled from follow-on schedule check
[2022-10-08 20:11:18,065] {local_task_job.py:146} INFO - Task exited with return code 0
```

As you can see, the output of echo hello world appears in the log.
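The log line "Starting attempt 1 of 6" comes from `"retries": 5` in default_args: one initial try plus five retries. The sketch below computes the attempt count and, assuming every attempt fails immediately, the earliest start time of each attempt with a fixed `retry_delay`. It ignores options such as `retry_exponential_backoff`, and the helper names are hypothetical.

```python
from datetime import datetime, timedelta
from typing import List


def total_attempts(retries: int) -> int:
    """One initial try plus `retries` retries (retries=5 gives 6 attempts)."""
    return retries + 1


def retry_start_times(first_start: datetime, retries: int, retry_delay: timedelta) -> List[datetime]:
    """Earliest start of each attempt, assuming each attempt fails instantly."""
    return [first_start + i * retry_delay for i in range(total_attempts(retries))]


if __name__ == "__main__":
    for t in retry_start_times(datetime(2022, 10, 8, 20, 11), 5, timedelta(minutes=2)):
        print(t)
```

With a two-minute delay, a task that keeps failing would be given up on roughly ten minutes after its first attempt.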

More Details

Now let's dig into some details. In the hello world example there is only one task, defined with BashOperator. Now let's define a task that runs Python code using PythonOperator.

```python
from airflow import DAG
from airflow.operators.python import PythonOperator
from datetime import datetime, timedelta

default_args = {
    "owner": "123",
    "retries": 5,
    "retry_delay": timedelta(minutes=2),
}

def greet():
    print("hello you")

with DAG(
    dag_id="dag_details",
    default_args=default_args,
    description="my details dag",
    start_date=datetime(2022, 10, 7),
    schedule_interval="@daily",
) as dag:
    task = PythonOperator(
        task_id="details_task",
        python_callable=greet,
    )
```

This time, the task runs the Python function we defined.

Now let's make some changes: instead of a single task, we can define several.

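```python
from airflow import DAG
from airflow.operators.python import PythonOperator
from datetime import datetime, timedelta

# same default_args as in the previous example
default_args = {
    "owner": "123",
    "retries": 5,
    "retry_delay": timedelta(minutes=2),
}

def greet():
    print("hello you")

def get_name():
    print("Michael")

def get_age():
    print(20)

with DAG(
    dag_id="dag_details",
    default_args=default_args,
    description="my details dag",
    start_date=datetime(2022, 10, 7),
    schedule_interval="@daily",
) as dag:
    t1 = PythonOperator(
        task_id="details_task1",
        python_callable=greet,
    )

    t2 = PythonOperator(
        task_id="details_task2",
        python_callable=get_name,
    )

    t3 = PythonOperator(
        task_id="details_task3",
        python_callable=get_age,
    )
```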

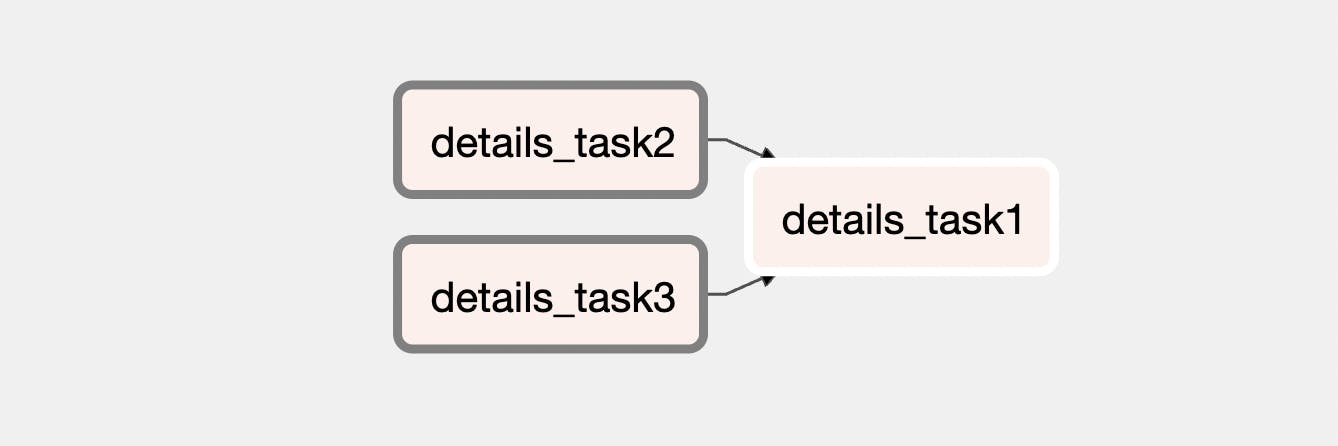

And we can also specify the execution order.

# first run t2 and t3, then run t1

[t2, t3] >> t

Then the dag graph will be like below.
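The `>>` syntax is ordinary Python operator overloading. A list has no `>>` for tasks, so for `[t2, t3] >> t1` Python falls back to the right operand's `__rrshift__`. The sketch below uses a hypothetical `Task` class (not Airflow's operator) to record dependencies that way, then derives one valid sequential order with Kahn's topological sort, which also detects cycles. The real scheduler may run independent tasks such as t2 and t3 in parallel; this only shows an order that respects the dependencies.

```python
from typing import List, Set


class Task:
    """Hypothetical stand-in for an Airflow operator that only tracks dependencies."""

    def __init__(self, task_id: str):
        self.task_id = task_id
        self.upstream: Set[str] = set()

    def __rshift__(self, other):
        # self >> other: other runs after self. Accepts one task or a list of tasks.
        targets = other if isinstance(other, list) else [other]
        for target in targets:
            target.upstream.add(self.task_id)
        return other

    def __rrshift__(self, other):
        # [a, b] >> self: lists do not define >>, so Python calls this method instead.
        for source in other:
            source >> self
        return self


def execution_order(tasks: List[Task]) -> List[str]:
    """Kahn's algorithm: one sequential order that respects every dependency."""
    pending = {t.task_id: set(t.upstream) for t in tasks}
    order: List[str] = []
    while pending:
        ready = sorted(tid for tid, deps in pending.items() if not deps)
        if not ready:
            raise ValueError("dependency cycle detected")
        for tid in ready:
            order.append(tid)
            del pending[tid]
        for deps in pending.values():
            deps.difference_update(ready)
    return order


if __name__ == "__main__":
    t1, t2, t3 = Task("details_task1"), Task("details_task2"), Task("details_task3")
    [t2, t3] >> t1
    print(execution_order([t1, t2, t3]))
```

The cycle check is why the "A" in DAG matters: if two tasks depended on each other, no task would ever become ready.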

If the task function needs parameters, we can pass them through the op_kwargs argument of PythonOperator.

```python
def greet(name, age):
    print("hello", name, age)

# ...

t1 = PythonOperator(
    task_id="details_task1",
    python_callable=greet,
    op_kwargs={"name": "Michael", "age": 20},
)
```
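Roughly speaking, PythonOperator calls `python_callable(*op_args, **op_kwargs)` when the task runs (Airflow 2 additionally passes context values such as `ti` when the function's signature asks for them). The sketch below models only the argument-passing part; `run_callable` is a hypothetical helper, not an Airflow API.

```python
from typing import Any, Callable, Dict, Optional, Sequence


def run_callable(
    python_callable: Callable[..., Any],
    op_args: Optional[Sequence[Any]] = None,
    op_kwargs: Optional[Dict[str, Any]] = None,
) -> Any:
    """Simplified model of how a PythonOperator-style task invokes its function."""
    return python_callable(*(op_args or []), **(op_kwargs or {}))


def greet(name, age):
    message = f"hello {name} {age}"
    print(message)
    return message


if __name__ == "__main__":
    run_callable(greet, op_kwargs={"name": "Michael", "age": 20})
```

In Airflow, the callable's return value is also pushed to XCom under the key `return_value` by default, which connects to the next topic.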

If we want to pass values between tasks, we can use XComs ("cross-communications"), which are built into Airflow.

```python
# pull values from XCom
# task_ids must be the task_id of the task that pushed the values
def greet(ti):
    first_name = ti.xcom_pull(task_ids="get_name", key="first_name")
    last_name = ti.xcom_pull(task_ids="get_name", key="last_name")
    print("hello", first_name, last_name)

# push values into XCom
def get_name(ti):
    ti.xcom_push(key="first_name", value="Jerry")
    ti.xcom_push(key="last_name", value="Tom")
```

Airflow passes the ti (task instance) argument automatically, so tasks can share information by pushing values to and pulling values from XCom through this object. XComs are stored in Airflow's metadata database, so they are meant for small values.
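To make the push/pull bookkeeping concrete, here is an in-memory sketch in which values are keyed by (task_id, key). `FakeXComStore` and `FakeTaskInstance` are hypothetical stand-ins, not Airflow classes; the point is that a push is recorded under the pushing task's own task_id, which is what `task_ids` in `xcom_pull` must name.

```python
from typing import Any, Dict, Tuple


class FakeXComStore:
    """Hypothetical in-memory stand-in for XCom storage, keyed by (task_id, key)."""

    def __init__(self) -> None:
        self._values: Dict[Tuple[str, str], Any] = {}

    def push(self, task_id: str, key: str, value: Any) -> None:
        self._values[(task_id, key)] = value

    def pull(self, task_id: str, key: str) -> Any:
        # Returns None when nothing was pushed under this task_id and key.
        return self._values.get((task_id, key))


class FakeTaskInstance:
    """Hypothetical stand-in for `ti`, exposing xcom_push / xcom_pull."""

    def __init__(self, task_id: str, store: FakeXComStore) -> None:
        self.task_id = task_id
        self.store = store

    def xcom_push(self, key: str, value: Any) -> None:
        # A push is always recorded under the pushing task's own task_id.
        self.store.push(self.task_id, key, value)

    def xcom_pull(self, task_ids: str, key: str) -> Any:
        return self.store.pull(task_ids, key)


def get_name(ti) -> None:
    ti.xcom_push(key="first_name", value="Jerry")
    ti.xcom_push(key="last_name", value="Tom")


def greet(ti) -> str:
    first_name = ti.xcom_pull(task_ids="get_name", key="first_name")
    last_name = ti.xcom_pull(task_ids="get_name", key="last_name")
    return f"hello {first_name} {last_name}"


if __name__ == "__main__":
    store = FakeXComStore()
    get_name(FakeTaskInstance("get_name", store))  # the upstream task runs first
    print(greet(FakeTaskInstance("greet", store)))
```

If the pushing task had a different task_id (for example details_task2), greet would pull None for both values.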

Next is the schedule_interval parameter. Above, we scheduled the DAG to run daily with the preset string "@daily". We can also define fine-grained schedules with cron syntax; crontab.guru is a handy tool for crafting cron expressions.
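Presets such as "@daily" are shorthands for cron expressions; to my knowledge Airflow maps them as in the `PRESETS` table below. The matcher is a deliberately simplified sketch (Airflow itself relies on a cron library): it supports `*`, `*/n`, single numbers and comma lists, not ranges or names, and it ANDs all five fields, whereas real cron ORs day-of-month and day-of-week when both are restricted. All names are hypothetical.

```python
from datetime import datetime

# Cron equivalents of common schedule presets (minute hour day-of-month month day-of-week).
PRESETS = {
    "@hourly": "0 * * * *",
    "@daily": "0 0 * * *",
    "@weekly": "0 0 * * 0",
    "@monthly": "0 0 1 * *",
    "@yearly": "0 0 1 1 *",
}

# Lowest legal value of each field, needed to interpret '*/n' steps.
FIELD_MINIMUMS = (0, 0, 1, 1, 0)


def to_cron(schedule: str) -> str:
    """Translate a preset such as "@daily" into cron syntax; pass cron strings through."""
    return PRESETS.get(schedule, schedule)


def field_matches(field: str, value: int, minimum: int) -> bool:
    """Match one cron field made of '*', '*/n', a number, or a comma list of those."""
    for part in field.split(","):
        if part == "*":
            return True
        if part.startswith("*/"):
            # '*/n' steps from the field minimum, e.g. '*/2' in day-of-month is 1, 3, 5, ...
            if (value - minimum) % int(part[2:]) == 0:
                return True
        elif int(part) == value:
            return True
    return False


def cron_matches(schedule: str, moment: datetime) -> bool:
    """Return True if `moment` is a firing time of the (simplified) schedule."""
    fields = to_cron(schedule).split()
    cron_weekday = (moment.weekday() + 1) % 7  # cron: 0 = Sunday; Python: 0 = Monday
    values = (moment.minute, moment.hour, moment.day, moment.month, cron_weekday)
    return all(
        field_matches(field, value, minimum)
        for field, value, minimum in zip(fields, values, FIELD_MINIMUMS)
    )
```

For example, "*/15 9 * * 1" fires every 15 minutes during the 9 o'clock hour on Mondays.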

The last important detail, I think, is the start_date parameter. Suppose the start date is 2022/10/01, today is 2022/10/08, and the DAG is scheduled daily. When the DAG is started, Airflow catches up and runs it for every daily interval from 10/01 to 10/07, 7 times in total, so no scheduled run is missed. If we don't want this and prefer to ignore the past, we can pass catchup=False to the DAG, and it will then run only once, for the most recent interval.
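The count of 7 follows from how daily intervals work: a run for execution date D covers the interval from D to D plus one day and only starts once that interval has ended. (This is also why the hello world log, produced on 2022-10-08, shows execution date 2022-10-07.) The sketch below models this for a daily schedule only; `daily_runs` is a hypothetical helper, not an Airflow function.

```python
from datetime import datetime, timedelta
from typing import List


def daily_runs(start_date: datetime, now: datetime, catchup: bool = True) -> List[datetime]:
    """Execution dates of a daily DAG whose interval has fully ended by `now`."""
    interval = timedelta(days=1)
    dates = []
    current = start_date
    # A run for `current` can start only after current + interval has passed.
    while current + interval <= now:
        dates.append(current)
        current += interval
    # With catchup disabled, only the most recent completed interval is scheduled.
    return dates if catchup else dates[-1:]


if __name__ == "__main__":
    for d in daily_runs(datetime(2022, 10, 1), datetime(2022, 10, 8)):
        print(d.date())
```

With the dates from the example, catchup yields runs for 10/01 through 10/07, while catchup=False leaves only the 10/07 run.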